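In recent years, software innovation and entrepreneurship driven by mass participation has become a new form of software development and application in the Internet era, and it is rapidly changing the global model of software innovation and the landscape of the software industry. Systematically revealing the core mechanisms behind this Internetware style of software development, and building a software innovation ecosystem suited to China's independent development, is a major historic opportunity for China's software industry.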

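Against this background, the National University of Defense Technology, Peking University, Beihang University, the Institute of Software of the Chinese Academy of Sciences, and other institutions jointly carried out research on network-based, crowd-based methods and technologies for software development. The work revealed the mechanisms of large-scale Internet collaboration, centered on crowd-based collaborative development, open resource sharing, and continuous trustworthiness evaluation. Combining these mechanisms with engineering methods for software development, it systematically proposed a network-based, crowd-based software development method, formed a technology system for Internetware development and operation, and built a trusted national environment for software resource sharing and collaborative production (abbreviated "Trustie", Chinese short name "确实").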

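The Trustie team grew up through this process. It is a group of researchers who are willing to explore, innovate, and take on challenges, and it includes university teachers, engineers, graduate students, and undergraduates.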

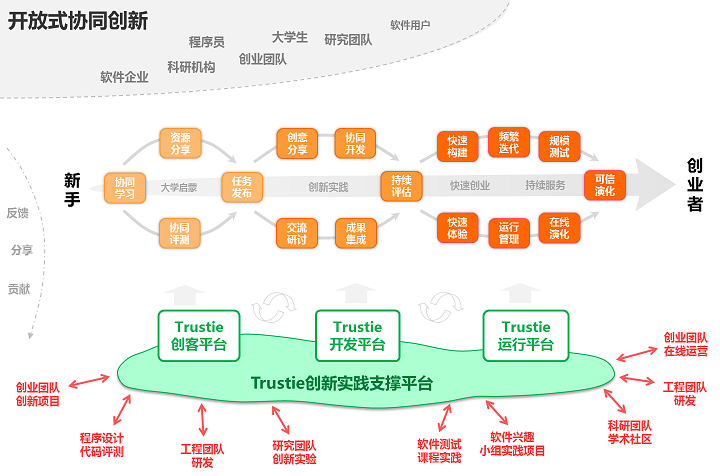

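The Trustie team revealed the mechanisms of large-scale Internet collaboration, centered on crowd-based collaborative development, open resource sharing, and continuous trustworthiness evaluation. Combining these with engineering methods for software development, the team systematically proposed a network-based, crowd-based software development method, produced technical inventions in three areas, and built a trusted national environment for software resource sharing and collaborative production (abbreviated "Trustie", Chinese short name "确实"). The work has yielded 26 granted invention patents, 38 software copyrights, and 7 technical standards (or proposals), and the inventors have been invited to give keynote talks at major domestic and international academic conferences more than 20 times, as shown in Figure 1.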

Figure 1. Structure of the Trustie results

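The project developed a model for turning results into practice: patenting technical results, promoting patents through standards, delivering tools and environments as services, and making talent training broadly accessible. This has provided key technical support and practical guidance for the development of China's innovative software industry. Trustie has significantly improved the software production capability of large software companies such as Neusoft, Digital China, Careland, and Wonders Information, and has supported trusted software production in key fields including aviation, aerospace, and national defense. Public innovation support platforms have been set up in 9 software parks, covering more than 2,500 software companies and accumulating more than 330,000 software resources. The project also created a well-known international open source community and supports the crowd-based development of more than 2,560 software projects, including national "He-Gao-Ji" (core electronic devices, high-end generic chips, and basic software) major projects, international cooperation projects, and education projects. It is widely used in software talent training at more than 100 universities, and has more than 280,000 users of all kinds.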

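Two sub-results of the project have won, respectively, the First Prize of the 2013 Hunan Provincial Technological Invention Award and the First Prize for Science and Technology Progress of the 2012 Ministry of Education Outstanding Scientific Research Achievement Award for Institutions of Higher Education. The project has also passed the preliminary evaluation for the Second Prize of the 2015 National Technological Invention Award.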

Figure 2. Trustie2.0 software innovation and entrepreneurship service platform

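The project team has now achieved a series of new breakthroughs in the collaborative construction, runtime management, trustworthiness evaluation, and continuous evolution of Internetware, and has proposed and built Trustie2.0, an application model and supporting platform for Internetware innovation and entrepreneurship, as shown in Figure 2. The team is working hard and with full confidence to contribute to building China into an innovation-driven country.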

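* Trustie practical teaching platform
* Trustie collaborative development platform
* Trustie open source monitoring and recommendation platform
* Trustie trusted resource repository platform
* Trustie service composition development platform
* Trustie trustworthiness evaluation and enhancement platform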

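<p> The main reasons our earlier ICSE submission was rejected, for everyone's reference: </p>
<p> 1. The Intro did not build enough tension and lacked supporting data, so reviewers could easily question whether the motivation was meaningful. </p>
<p> 2. The paper did not state explicit research questions, which invites doubts from reviewers. </p>
<p> 3. The significance and contributions of the research, including its practical value, were not explained clearly. </p>
<p> 4. The model had minor problems, although the reviewers did not notice them. </p>
<p> 5. There was a lot of redundant information. </p>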

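<p> A few lessons learned: </p>
<p> 1. In the Intro, use real data, statistics, and prior knowledge to set up the tension (based on Teacher Yin's advice). </p>
<p> 2. In the Intro, state the specific research questions, significance, and contributions clearly (based on Teacher Wang's advice). </p>
<p> 3. For empirical studies, it is best to provide the code and data used in the analysis and experiments. </p>
<p> 4. Empirical studies are best when they combine qualitative methods (surveys, etc.) with quantitative methods (regression analysis, etc.). </p>
<p> 5. Choose the regression model carefully, handle different types of parameters with care (for example, the repeated significance testing problem), and give a sound interpretation of the regression results. </p>
<p> 6. At the end of the paper, give recommendations for different audiences such as researchers and developers, to show the practical relevance. </p>
<p> 7. Put key conclusion statements in boxes. </p>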

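As the title says, usernames should contain only letters and digits. Some accounts currently contain the special character ".", which makes their project repositories inaccessible.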
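The letters-and-digits rule could be checked with a small validator like the sketch below. This is only an illustration: the function name `is_valid_username` and the choice of ASCII letters and digits are assumptions, not the platform's actual code.

```python
import re

# Assumption: "letters and digits" means ASCII A-Z, a-z, 0-9 only.
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9]+")


def is_valid_username(name: str) -> bool:
    """Return True if the name is non-empty and purely alphanumeric (hypothetical helper)."""
    return USERNAME_PATTERN.fullmatch(name) is not None
```

Running the same check on the server before a username is saved would reject names such as `alice.01` even if the client-side form is bypassed.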

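To reproduce: enter a valid username and click Submit; while the page is loading, change the username and click Submit again. The backend saves the modified username.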

I created a test repository, and the Git repository address shown is xxx/xphi/xxxx

But in Git the actual address is xxx/sunbingke/xxx

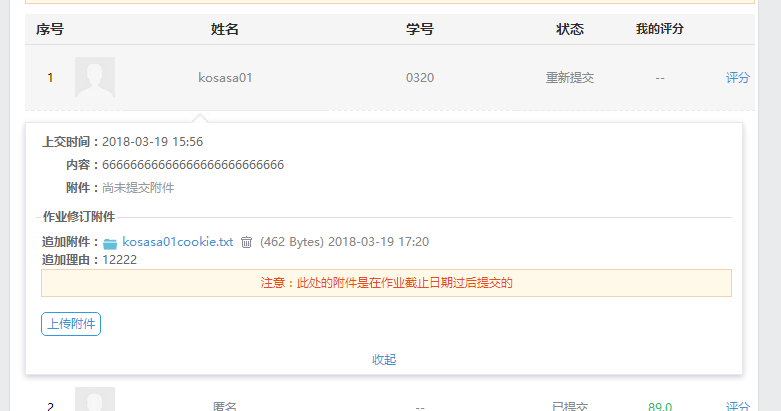

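1. Notifications for anonymous-review appeals are missing from the bell-icon count; new messages of this type do not show a number on the bell icon. Please add this.

2. Replies to assignment grading (including replies to anonymous reviews) need a message notification:

Your review has a new reply: XXXXXX

My own

Someone else's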

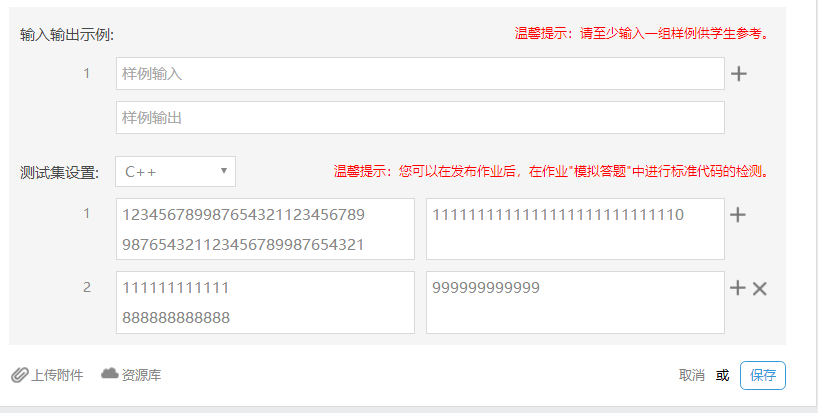

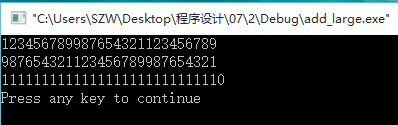

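This problem is optional, but everyone is encouraged to try it!

1. Task

Implement addition of very long integers whose sum has at most 100 digits. Read from the keyboard any two decimal integers of at most 100 digits each (with a sum of at most 100 digits) and output the result of adding them.

2. Hint:

Store the two long integers in character arrays and simulate column addition. Consider combining integer division "/" and remainder "%" to handle the carry.

3. Example:

Input: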

123456789987654321123456789

987654321123456789987654321

Output:

1111111111111111111111111110

Input/output samples: 1 set
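The column-addition idea from the hint can be sketched as follows. This is a minimal illustration in Python rather than a reference solution: the function name `add_long` is hypothetical, strings stand in for the character arrays mentioned in the hint, and the inputs are assumed to be non-empty strings of decimal digits. Python's built-in big integers are deliberately not used, so that the carry handling with `//` and `%` stays visible.

```python
def add_long(a: str, b: str) -> str:
    """Add two non-negative decimal integers given as digit strings."""
    i, j = len(a) - 1, len(b) - 1   # start at the least significant digits
    carry = 0
    digits = []                     # result digits, least significant first
    while i >= 0 or j >= 0 or carry:
        total = carry
        if i >= 0:
            total += ord(a[i]) - ord("0")   # character to digit value
            i -= 1
        if j >= 0:
            total += ord(b[j]) - ord("0")
            j -= 1
        digits.append(chr(total % 10 + ord("0")))  # digit for this column
        carry = total // 10                        # carry into the next column
    return "".join(reversed(digits))


# The sample input from the task statement.
print(add_long("123456789987654321123456789", "987654321123456789987654321"))
```

Working from the rightmost digit leftward mirrors how the addition is done by hand; the loop keeps running while either number still has digits or a carry remains, which is what produces the extra leading digit in the sample.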
